Loading data to Snowflake via web console

August 1, 2021

It’s been a long time since I wrote anything on my blog. I would like to reignite my love for writing technical posts, so I am starting off with something I am currently exploring.

I have been learning Snowflake and am going to share some basics of what you can do with this cloud-based data storage and analytics service. I will not bore you with introductions or theory on how it works; instead I will jump straight into how you upload data into your cloud storage using the Snowflake web console.

(Please note that Snowflake gives you 30 days of trial access to its services, with limits on the amount of data and storage you can use, which is good enough to get some hands-on time with the platform. Just google ‘Snowflake trial’ and it will take you to the right page to get started.)

Without further ado, let’s get started with a quick look at data upload on Snowflake via the web console.

Log in to your Snowflake web console, where you might already have a database, or create a demo DB as I have in my screen below.

I am going to use my ‘DEMO_DB’ and create a table named ‘EMP’. To do this, go to the ‘Worksheet’ tab, which allows you to run your queries. Again, a lot of this is worth exploring on your own before you try it, and most of it is easy and intuitive.

    create or replace table emp (
        first_name string,
        last_name string,
        email string,
        streetaddress string,
        city string,
        start_date date
    );

Come back to the Database tab and click on your database ‘DEMO_DB’, and you will see your ‘EMP’ table under it. Clicking on the table takes you to its details and the list of fields in the table. There you will see the option to upload data, “Load table”.

Clicking on it takes you through the following steps:

Create a file format, if one does not exist, with the following options:

Click on ‘Load’ once all the steps are done:

And this shows up as a successful load:

My sample data for your reference from EMployee2.csv file:

Let’s query the table and look at the sample data we uploaded. Please note that the web console is fine for small amounts of data, but for larger files it is advisable to upload via SnowSQL (the Snowflake command-line client), which I will try to cover in my next write-up. Thank you!
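Before uploading a file through the load wizard, it can help to check that every row fits the EMP layout. The following is only a minimal sketch in Python: the function name `check_emp_csv`, the sample rows and the assumption that dates arrive as YYYY-MM-DD are all mine for illustration, and the rows are invented rather than taken from my EMployee2.csv file.

```python
import csv
import io
from datetime import date

# Column order of the EMP table created earlier in this post.
EMP_COLUMNS = ["first_name", "last_name", "email", "streetaddress", "city", "start_date"]

def check_emp_csv(text, has_header=True):
    """Return (line_number, problem) tuples for rows that would not fit EMP."""
    problems = []
    reader = csv.reader(io.StringIO(text))
    for line_no, row in enumerate(reader, start=1):
        if has_header and line_no == 1:
            continue  # skip the header line
        if len(row) != len(EMP_COLUMNS):
            problems.append((line_no, f"expected {len(EMP_COLUMNS)} fields, got {len(row)}"))
            continue
        try:
            date.fromisoformat(row[5])  # assumption: dates are written as YYYY-MM-DD
        except ValueError:
            problems.append((line_no, f"start_date {row[5]!r} is not YYYY-MM-DD"))
    return problems

# Invented sample rows, for illustration only.
sample = """first_name,last_name,email,streetaddress,city,start_date
Asha,Rao,asha@example.com,12 Lake Road,Pune,2021-07-01
Ben,Lee,ben@example.com,4 Hill Street,Leeds,01/08/2021
Cara,Diaz,cara@example.com,Austin,2021-06-15
"""

for line_no, problem in check_emp_csv(sample):
    print(line_no, problem)
```

Here the third line is reported for its date format and the fourth for a missing field, which are the kind of rows a load would typically reject or misplace.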

Informatica – Registering a PowerExchange plugin

July 29, 2016

Hello all, it’s been a very long time since I came up with a post on my blog. I finally decided to push myself to write one simple post to get things kick-started. So here is a simple post which I believe will help a few people and keep me going with sharing more and more.

This post covers an administration activity on an Informatica installation, specifically PowerCenter and PowerExchange. On a recent assignment we applied an upgrade to the existing version of Informatica, from 9.1.0 HF6 to 9.6.1 HF4. I had some really good experience from it and would like to share it with all of you in the hope that it helps a few of us. I plan to write a series of posts covering my experience and knowledge on this.

To start with: installing/configuring plugins on a new installation or on an upgraded version of Informatica. When you install Informatica, some plugins come native/built in, while others you have to install as external plugins, which are again provided by Informatica.

For instance, I had to install the new version of PowerExchange for the SAP and Essbase connectors on my upgraded PowerCenter 9.6.1.

Step 1:

Install the PowerExchange setup for your version, which can be downloaded with the help of Informatica’s GCS team. This is a regular installer, and it places the respective .xml for the PowerExchange plugin in the Informatica server installation path – <Installation-folder>/9.6.1/server/bin

Step 2:

Log on to your Admin console and, in your Repository settings, change the Operating mode from ‘Normal’ to ‘Exclusive’. This allows no other user to access the Repository while your admin activity is carried out.

Step 3:

Registering Plugin through Admin console

This is a pretty straightforward method, where you log in to the Informatica Administrator with the right access.

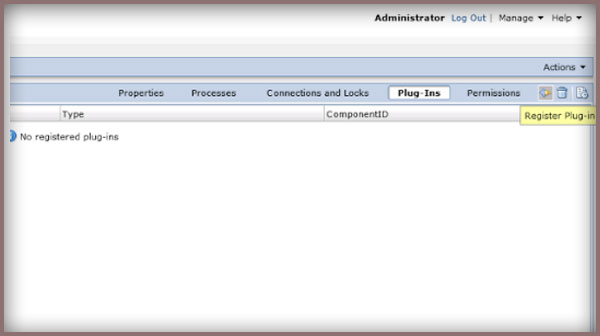

Then, in the Repository settings, the ‘Plugin’ tab in the rightmost pane shows an empty page if you have no other plugin installed. Click on the Plugin icon/symbol at the top right of the pane, as shown below –

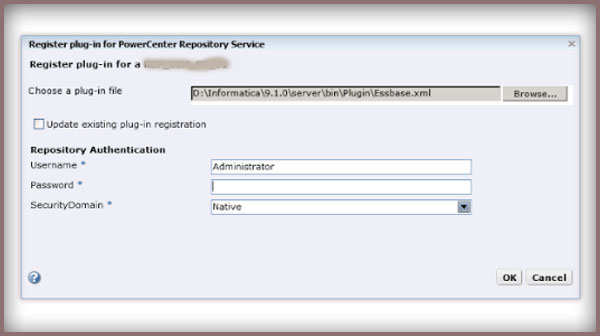

A pop-up then lets you browse to your plugin, which should be in your server/bin path as said earlier. Select it and continue with a suitably authorized user to complete the registration of the plugin.

Register Plugin through Command line

This again requires bringing your Repository into ‘Exclusive’ mode and then logging on to your server machine.

To start with, open the pmrep executable available in server/bin and connect using the administrative account. The syntax is as below –

pmrep connect -r REP_name -d DOM_name -n Administrator -x admin_password

This connects you to your repository as Administrator.

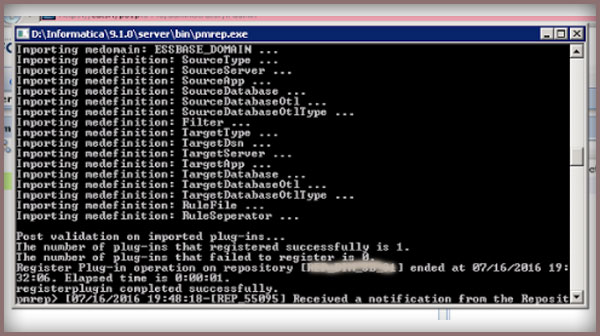

Find the plugin .xml in the following directory: $INFA_HOME\server\bin\Plugin, which is created by your earlier PowerExchange installation. Now you can execute the RegisterPlugin command with a reference to your plugin .xml file:

pmrep registerplugin -i <$INFA_HOME\server\bin\Plugin\plugin_file.xml> -e -N

where ‘-e’ is used when you want the registration to update an older version of the same plugin (you can see the same option as a checkbox when registering through the Admin console).

And ‘-N’ is required when you register on an already existing Repository that has just been upgraded, when PowerCenter already includes the plugin and it only needs registering, or when you are re-registering an existing plugin just to get the additional features of a new version/upgrade of the plugin.

This registers your already installed plugin and lets you use the features of the respective PowerExchange connectivity.

Hope this helps, happy learning and sharing all.. 🙂

Data Warehousing – Types of Facts you must know..!!

March 21, 2015

Just a note on data before we get started. I loved this quote, which I recently got to read –

“Data are not taken for museum purpose; they are taken as a basis for doing something. If nothing is to be done with the data, then there is no use in collecting any. The ultimate purpose of taking data is to provide a basis for action or a recommendation for action.” [a quote from W. Edwards Deming, a renowned statistician]

Before we jump into the types of facts, let’s get some clarity on what a fact table is and what role it plays in a warehouse. In the traditional data warehouse model we have facts and dimensions, where the facts play a major role in giving meaning and information to the data, which end users rely on for trend analysis and many other reporting purposes.

As Kimball puts it – “Fact tables are the foundation of the data warehouse. They contain the fundamental measurements of the enterprise, and they are the ultimate target of most data warehouse queries.”

Additive Fact tables

An additive fact table is one that holds transaction data, with the dimensions of each transaction and the measurements (amount/hours/value) on each. This is the kind of fact table that most data models use, with the measurements opening the way for plenty of analysis. Its measures can be summed/aggregated across all the dimension columns, giving a grouped result with the summed measurement/amount for different dimension combinations.

An example is a sales table, where you have dimensions like Customer, Date of sale and Sales ID, and measurements like Quantity and Amount/Price. In this case you can group by customer, by day of sale or even by Sales_ID to get an aggregated Amount, Quantity or both.
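The grouping described for the sales example can be sketched in a few lines of Python. The rows and the helper `sum_by` are made up purely for illustration; the point is only that the same measures can be summed along any of the dimensions.

```python
from collections import defaultdict

# Hypothetical sales fact rows: (customer, sale_date, sales_id, quantity, amount)
sales = [
    ("C1", "2015-03-01", 101, 2, 200.0),
    ("C1", "2015-03-02", 102, 1, 150.0),
    ("C2", "2015-03-01", 103, 5, 500.0),
]

def sum_by(rows, key_index):
    """Sum quantity and amount grouped by the dimension at key_index."""
    totals = defaultdict(lambda: [0, 0.0])
    for row in rows:
        totals[row[key_index]][0] += row[3]  # quantity
        totals[row[key_index]][1] += row[4]  # amount
    return {key: tuple(value) for key, value in totals.items()}

by_customer = sum_by(sales, 0)  # group by Customer
by_date = sum_by(sales, 1)      # group by Date of sale

print(by_customer)
print(by_date)
```

Both groupings give totals that make business sense, which is what makes the measures additive.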

Semi Additive Facts

Semi-additive facts are those that comprise dimensions and measurements where the measures can be summed across some of the dimensions but not across the rest.

A good example is a bank balance tracking table, which gives the debit and credit information and the balance after each transaction of the respective customer. Here it would not make any sense to sum the balances across dates, which would give a meaningless measurement. Whereas the same balance is useful when tracked customer-wise.
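A small Python sketch of the balance example, with invented snapshot rows and hypothetical helper names. It assumes one balance row per customer per date, and shows which aggregations read sensibly and which one does not.

```python
# Hypothetical end-of-day balance snapshots: (customer, snapshot_date, balance)
balances = [
    ("C1", "2015-03-01", 100.0),
    ("C1", "2015-03-02", 120.0),
    ("C2", "2015-03-01", 300.0),
    ("C2", "2015-03-02", 250.0),
]

def total_on(rows, snapshot_date):
    """Meaningful: total balance held across all customers on one date."""
    return sum(balance for _, day, balance in rows if day == snapshot_date)

def latest_balance(rows, customer):
    """Meaningful: a customer's balance over time is read, not summed."""
    history = sorted((day, balance) for cust, day, balance in rows if cust == customer)
    return history[-1][1]

def summed_over_dates(rows, customer):
    """Misleading: adding one customer's balances across dates."""
    return sum(balance for cust, _, balance in rows if cust == customer)

print(total_on(balances, "2015-03-01"))
print(latest_balance(balances, "C1"))
print(summed_over_dates(balances, "C1"))  # not an amount the customer ever held
```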

Non Additive Facts

A non-additive fact has measurements and dimensions where no summing/aggregation gives any meaning to the data.

An example is a table giving the discounts for products on the respective dates. Here no meaning can be made of the discount summed across any of the dimensions.

Factless Facts

Fact tables built completely on dimensions are called factless. These facts basically capture combinations of different dimensions, where the intersection itself carries the meaning.

A very good example is a table giving the promotional code for each product on a date. Here all columns are dimensions, yet it still makes sense from the end user’s point of view to build it as a fact table that ties the product on a date to the promo code assigned to it.
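Since a factless table has no measure column, what you typically do with it is count rows per dimension value. A minimal Python sketch, with invented rows and promo codes:

```python
from collections import Counter

# Hypothetical factless fact rows: (product, promo_date, promo_code); no measure column
promotions = [
    ("P1", "2015-03-01", "SPRING10"),
    ("P2", "2015-03-01", "SPRING10"),
    ("P1", "2015-03-02", "FLASH5"),
]

# Counting the rows is the only "measure" available.
rows_per_code = Counter(code for _, _, code in promotions)
rows_per_product = Counter(product for product, _, _ in promotions)

print(rows_per_code)
print(rows_per_product)
```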

Hope this was helpful in understanding the basic types of facts that we can build as our data model requires. The decision to pick the right fact type always has to be based on the scenario and the needs of the end users (reporting/analytics).

Hello All,

Hope you all had a great Christmas and are waiting to kick off a new year soon. Before we kick off, a happy new year to all of you in advance..!!

Just as I was waking up to welcome a sunny Saturday, a thought kept nagging at me to do this: to write a post on what I had been badly attacked by. It was indeed not a physical attack 🙂

So without further dawdling, let me explain how hard I had to fight with the Target Update Override, which lets me customize the update used in Informatica so that we can update any target table despite not having the right primary key combination for the update. I have done this before and blundered every time; each blunder taught me something, yet time and again I have repeated the same mistake. So I thought I would help fellow developers avoid it, and I am writing this post to help you with Target Update Override usage.

Typically, when you go for an update on your target, you use the Update Strategy transformation linked to your target ports, and Informatica uses the primary key columns to update the rest of the columns you link to the target. There are also scenarios where you wish to update a few fields based on a conditional check on other fields (non-primary-key columns).

There are two ways of doing this,

Method 1 –

Change the target structure on the Informatica side so that the ports/columns you check against to update the row are marked as keys. This means you enable the Primary key property on these check fields on the Informatica end and then use the Update Strategy transformation in the usual way.

Method 2 –

Script an update SQL override in the target. It is written as a usual UPDATE query on your target object in the mapping, and there is a strict syntax to be followed, which also means proper spacing between the operators and the SQL literals. By default, the PowerCenter Integration Service updates target tables based on key values. You can override the default UPDATE statement for each target in a target definition. As discussed, we would like to update on the basis of non-key columns, and this can be done something like below:

Syntax:

UPDATE SALES_TBL SET EMP_NAME = :TU.EMP_NAME, DATE_SHIPPED = :TU.DATE_SHIPPED, TOTAL_SALES = :TU.TOTAL_SALES WHERE EMP_ID = :TU.EMP_ID

Because the target ports must match the target column names, the update statement includes the keyword :TU to specify the ports in the target transformation. If you modify the UPDATE portion of the statement, use :TU to specify ports.
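To see the shape of such an override outside Informatica, here is a rough Python sketch using the standard library’s SQLite. It is only an analogy: SQLite’s named parameters (`:emp_name` and so on) stand in for the `:TU.<port>` references, the table has no primary key at all, and the rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# No primary key is declared, so the update relies purely on the WHERE clause.
conn.execute(
    "CREATE TABLE SALES_TBL (EMP_ID INTEGER, EMP_NAME TEXT, DATE_SHIPPED TEXT, TOTAL_SALES REAL)"
)
conn.executemany(
    "INSERT INTO SALES_TBL VALUES (?, ?, ?, ?)",
    [(1, "Old Name", "2014-01-01", 10.0), (2, "Other", "2014-01-01", 20.0)],
)

# Same shape as the override; each :name plays the role of a :TU.<port> reference.
update_sql = (
    "UPDATE SALES_TBL "
    "SET EMP_NAME = :emp_name, DATE_SHIPPED = :date_shipped, TOTAL_SALES = :total_sales "
    "WHERE EMP_ID = :emp_id"
)

# One incoming row, as the target would receive it from the mapping.
incoming_row = {
    "emp_id": 1,
    "emp_name": "New Name",
    "date_shipped": "2014-12-27",
    "total_sales": 99.5,
}
conn.execute(update_sql, incoming_row)

rows = conn.execute("SELECT * FROM SALES_TBL ORDER BY EMP_ID").fetchall()
print(rows)
```

Only the row matching the WHERE condition changes; the other row is left untouched.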

I am adding this part to save you time. I made a very silly mistake while composing my update override query. For a very long time my session kept failing, stating that my update query was wrong, and I was unable to figure out what was wrong.

I revisited it almost 100 times, changing all that I could, and still could not see what went wrong 😦

Finally a big brick hit my head: I copied my update SQL into Notepad and found that I had no space between the WHERE clause and my operand. I had not been able to spot this because the operand went onto the next line, just as in the picture below, which I had taken to be the usual word wrap..!!

Hope this helps you and saves you time. Thanks for reading the post, and do share, as it’s fun to learn and share 🙂

So, finally, here I go with an article on CDC (Change Data Capture) implementation through Informatica, which has been waiting a long time to be posted.

Requirement – To compare the data in the source system with the data pulled earlier into the warehouse, and to track updates, new inserts and deletes (data that is no longer in the source). I will continue this CDC implementation in another article on loading the data forward into a table that saves the change history for the data user.

Mapping Design:

The mapping I have come up with for the CDC logic would be as below,


The mapping involves the staging/source table, which is a daily truncate-and-load from the source system and so reflects the current status of the transactions in the source. The data is then sorted on the primary key columns; the sample table used is as below. The sample assumes the target CDC table has the same composite primary key columns.

Source Table:

Target Table:

The mapping uses simple logic: a Joiner (Full Outer Join) between the source table and the target CDC table, checking for matching and non-matching records on the primary key columns, as illustrated below,

Being a Full Outer Join, it lets all matching and non-matching transactions flow through. The following three expressions should then be defined to check the change type:

Flag_DELETE:

    ISNULL(TransactionID) AND ISNULL(ProductID) AND ISNULL(ReferenceOrderID) AND ISNULL(ReferenceOrderLineID)

Flag_INSERT:

    ISNULL(TransactionID_old) AND ISNULL(ProductID_old) AND ISNULL(ReferenceOrderID_old) AND ISNULL(ReferenceOrderLineID_old)

Flag_UPDATE:

    TransactionID = TransactionID_old AND
    ProductID = ProductID_old AND
    ReferenceOrderID = ReferenceOrderID_old AND
    ReferenceOrderLineID = ReferenceOrderLineID_old AND
    (TransactionDate != TransactionDate_old OR
     TransactionType != TransactionType_old OR
     Quantity != Quantity_old OR
     ABS(ActualCost - ActualCost_old) > 1)

Flag => IIF(v_Flag_Update=1,-1,IIF(v_Flag_Insert=1,-2,IIF(v_Flag_Delete=1,-3,0)))

where ‘0’ is for no-change records. Then use a Filter transformation to filter out all the no-change transactions with Flag=0.

Then a Router defines the groups as per the result of the above expression, checking whether the transaction is an INSERT, UPDATE or DELETE.
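The join-and-flag logic can be sketched in plain Python to make the flow easier to follow. This is not how Informatica executes it; it just mirrors the expressions, with a dictionary lookup per composite key playing the part of the Full Outer Join. The helper names and sample rows are invented, and key columns are assumed to be non-NULL.

```python
KEY = ("TransactionID", "ProductID", "ReferenceOrderID", "ReferenceOrderLineID")

def key_of(row):
    """Composite primary key of a row as a tuple."""
    return tuple(row[column] for column in KEY)

def classify(source_rows, target_rows):
    """Full outer join on the composite key, returning {key: flag}.

    Flags follow the post: -1 update, -2 insert, -3 delete, 0 no change.
    """
    src = {key_of(r): r for r in source_rows}
    tgt = {key_of(r): r for r in target_rows}
    flags = {}
    for k in src.keys() | tgt.keys():
        new, old = src.get(k), tgt.get(k)
        if old is None:
            flags[k] = -2  # only in source: insert
        elif new is None:
            flags[k] = -3  # only in target: delete
        elif (new["TransactionDate"] != old["TransactionDate"]
              or new["TransactionType"] != old["TransactionType"]
              or new["Quantity"] != old["Quantity"]
              or abs(new["ActualCost"] - old["ActualCost"]) > 1):
            flags[k] = -1  # keys match but a tracked column changed: update
        else:
            flags[k] = 0   # no change
    return flags

def row(transaction_id, quantity, cost):
    """Build an invented sample row; only id, quantity and cost vary."""
    return {"TransactionID": transaction_id, "ProductID": 1, "ReferenceOrderID": 10,
            "ReferenceOrderLineID": 1, "TransactionDate": "2014-08-01",
            "TransactionType": "S", "Quantity": quantity, "ActualCost": cost}

source = [row(1, 5, 100.0), row(2, 7, 50.0), row(4, 1, 9.0)]
target = [row(1, 5, 100.5), row(2, 9, 50.0), row(3, 2, 20.0)]

flags = classify(source, target)
changed = {k: f for k, f in flags.items() if f != 0}  # the Filter step
print(changed)
```

Transaction 1 differs only by 0.5 in cost, which is within the tolerance of 1, so it is treated as no change and filtered out.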


Then comes an Update Strategy transformation for each group to perform an update or insert. Note that even for DELETE flags I go for DD_UPDATE, which updates the CDC flag to ‘D’ in the target table for the transactions no longer available in the source system.

Also note that the delete flow pulls the ports of the CDC source table from the Router to the target and updates the records matching on the key columns with CDC_Flag=‘D’.

Finally, make the session-level changes for each target, defining ‘Update as Update’ or ‘Update as Insert’ for the respective targets. On the first run of the mapping all transactions are tagged as INSERT; subsequent runs then depend on the source system changes captured through the CDC logic of our mapping.

Note – The DELETE transactions are the ones that are no longer in the source table. Once these have been tagged ‘D’ in CDC_Flag and the change has been applied to the history tracking table, they can be deleted from the target CDC table as well. This will be covered in more detail in the next article shortly.

I will shortly be posting another phase of this topic, on CDC and tracking the history of transaction changes in your warehouse. Hope you enjoyed reading this and that it helps you. Please post your comments and feedback for better work and clearer ideas. Thanks!

Informatica – Do not use the ‘!=’ in expression

July 13, 2014

This is something I have faced a couple of times in my development when adding logic to a mapping, and I have always made the same mistake. That is why I decided to share it with other developers: avoid using the ‘!=’ (Not Equal) operator in your expressions as much as you can.

Let me quickly put down the real issue I faced using the ‘!=’ operator. It was a really interesting learning experience in understanding how your data is actually processed by Informatica’s Integration Service.

I had a requirement wherein I had to populate 2 targets satisfying 2 different conditions based on a column. As an illustration I am taking the records below,

| SID_No | Group_Nm | Amount |
| --- | --- | --- |
| 1 | A | 1000 |
| 2 | B | 1500 |
| 3 | A | 2000 |
| 4 | NULL | 1200 |
| 5 | NULL | 1000 |
| 6 | C | 2000 |
| 7 | C | 3000 |

Now my need for the day is to populate to 2 targets as,

Target_A -> where Group_Nm = 'A'

Target_B -> where Group_Nm != 'A' and Group_Nm != 'B' (expecting all records other than those in Target_A that are also not 'B')

So, as the above logic says, I went straight ahead and put down my expression as below, which would flag all Group A records as Flag=1, and the remaining records with Group name != 'B' were expected to get Flag=2.

    IIF(Group_Nm = 'A', 1,
        IIF(Group_Nm != 'B', 2, 0))

I am expecting

1,3 to be tagged as Flag=1

4,5,6,7 to be tagged as Flag=2

What I actually got in my targets was,

1,3 tagged as Flag=1 flows to Target_A

6,7 tagged as Flag=2 flows to Target_B.

So it was quite clear that the issue was with the records having Group_Nm as NULL, which gave a clue that the expression was not handling NULL values as expected. The root cause was the second IIF condition checking Group_Nm != 'B': scanning the remaining records 2,4,5,6,7, only 6,7 satisfied the condition. What really happened to 4,5 was that, because they had NULL values, the comparison could not evaluate to true for them, so they dropped through and were flagged as ‘0’.

Once I understood this, the change I made to the expression was to write it as below,

    IIF(Group_Nm = 'A', 1,
        IIF(Group_Nm = 'B', 0, 2))
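The difference between the two expressions can be reproduced with a small Python emulation. It rests on an assumption that matches what I observed: a comparison involving NULL yields NULL, and IIF takes its false branch when the condition is not true. The helpers `eq`, `ne` and `iif` are my own stand-ins, not Informatica functions.

```python
def eq(a, b):
    """SQL-style equality: NULL (None) on either side yields NULL (None)."""
    return None if a is None or b is None else a == b

def ne(a, b):
    """SQL-style inequality with the same NULL propagation."""
    result = eq(a, b)
    return None if result is None else not result

def iif(condition, when_true, when_false):
    """A NULL condition is not true, so the false branch is taken."""
    return when_true if condition is True else when_false

def flag_original(group_nm):
    return iif(eq(group_nm, "A"), 1, iif(ne(group_nm, "B"), 2, 0))

def flag_fixed(group_nm):
    return iif(eq(group_nm, "A"), 1, iif(eq(group_nm, "B"), 0, 2))

# SID_No -> Group_Nm, as in the sample records
groups = {1: "A", 2: "B", 3: "A", 4: None, 5: None, 6: "C", 7: "C"}

original = {sid: flag_original(g) for sid, g in groups.items()}
fixed = {sid: flag_fixed(g) for sid, g in groups.items()}
print(original)
print(fixed)
```

With the `!=` version, records 4 and 5 end up with Flag=0; with the `=` version they fall into the else branch and get Flag=2.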

So finally I had my results as expected, with the right records flowing to the respective targets. Hope this made things clear to you. Please drop in your questions or suggestions, if any.

Informatica – Best Practices, Do’s and Don’ts..!!

April 26, 2014

Hello all, today I am going to discuss some key best practices that I have followed or come across in my experience with Informatica. Most of them aim to keep ambiguity low and make it easy for every other developer around you to understand or share the work, and a few are intended to give better performance without making things complex. Let’s quickly go through them,

Shortcuts (Shared Folder) – Using a shared folder to share sources/targets across multiple folders keeps your repository objects few and clean. A shared folder lets you make the best use of re-usability in Informatica, maintains consistency and standards, and keeps the code intact with fewer duplicate objects being created.

Re-usability – It’s always good to take advantage of the re-usability options in Informatica, with Mapplets, Worklets, reusable transformations, tasks etc., which greatly reduce design effort and keep things simple.

Simplicity – Keep your mapping/code as simple as you can; it can be better to have multiple mappings each doing one thing rather than one mapping doing something more complex. Your mapping or code should be easy to understand and debug in the future.

Source Qualifier – This is the place where you can tap your required data and filter out unnecessary columns/rows that are nowhere relevant to your requirement. It’s always good to trim the data at the SQ rather than allowing it to be processed and filtered at a later part of your mapping.

Filter Transformation – It’s best to use your Filters as close as possible to the source, so that less data travels across your mapping, thus improving its performance.

Most important of all, avoid complex expressions and logic in your filter condition. It’s always better to do all of that in an Expression transformation and decide with a Flag port that travels on to the Filter, which keeps the Filter transformation far simpler.

Expression Transformation – It’s always good to use operators (||, +, /) rather than functions wherever possible, and processing and loading Integer data is generally faster than String data. Make good use of local variables, and keep calculations as simple as possible by using multiple variables to break down complex calculations into less redundant, easier-to-understand ones.

Joiners – If possible, join the data at the SQ. If you must go for a Joiner transformation, make sure you use the source with fewer records as the Master and the source with more records to process as the Detail.
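Why the smaller source makes a better Master can be pictured with a simple hash join in Python. This is a general illustration, not a description of Informatica internals; it assumes the master side is the one held in a cache while detail rows stream past it. The function name and sample rows are invented.

```python
def join_small_master(master_rows, detail_rows, key):
    """Cache the master rows by key, then stream the detail rows past the cache."""
    cache = {}
    for m in master_rows:              # memory grows with the master side only
        cache.setdefault(m[key], []).append(m)
    for d in detail_rows:              # detail rows are read one at a time
        for m in cache.get(d[key], []):
            yield {**m, **d}

# Invented data: the small department list is the master, employees are the detail.
departments = [{"dept_id": 1, "dept": "Sales"}, {"dept_id": 2, "dept": "HR"}]
employees = [
    {"dept_id": 1, "emp": "Asha"},
    {"dept_id": 1, "emp": "Ben"},
    {"dept_id": 2, "emp": "Cara"},
    {"dept_id": 3, "emp": "Dev"},  # no matching department, dropped by this inner join
]

joined = list(join_small_master(departments, employees, "dept_id"))
print(joined)
```

The cache holds two department rows however many employee rows flow through, which is the intuition behind picking the smaller source as Master.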

Parameterize your code – Wherever possible, parameterize your code; this makes future changes easy, with no change needed to the Informatica code. Avoid hard-coding values; parameterizing them eases any changes going forward.

SQL Query – The most important thing to check is how well your SQ query or any SQL-related process will work on the DB side. Your source/target relational objects on the DB side should be able to handle any UPDATE/INSERT/SELECT effectively. Look at the SQL execution plan and add indexes (Clustered/Non-Clustered) accordingly, as and when required, on the DB table.

Router transformation – Always use simple conditional expressions in the groups of your Router, avoiding any calculation/function performed row-wise at the Router, which eats away time and memory when running your session. Instead, do your conditional checks for grouping in an Expression before the Router and reduce them to a Flag that decides the grouping, so that the Router performs at its best. Never use a Filter after a Router, which is illogical: the Router is there to group, so the filter conditions can be added alongside the logic of your grouping (which again can be handled in an Expression when deriving the Flags to group by).

Lookups – Try using unconnected lookups wherever you can, as they let you reuse the same Lookup multiple times and use the returned value to test/calculate in an expression. Use a filter on your Lookup source to avoid caching unwanted data, thus optimizing cache use and improving performance. Try using a Joiner instead of a connected lookup wherever possible.

In case you use an unconnected lookup and wish to return multiple values to an expression, concatenate the columns and split the values after they are returned 🙂
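The concatenate-then-split trick looks like this in a Python sketch. The delimiter and the two column names are assumptions for illustration; in a mapping the packing would happen in the lookup’s return port and the splitting in the calling expression.

```python
DELIM = "|"  # assumed delimiter; pick one that cannot occur in the data

def lookup_return(first_name, city):
    """Pack two lookup columns into the single return value."""
    return f"{first_name}{DELIM}{city}"

def unpack(value):
    """Split the returned string back into its columns."""
    first_name, city = value.split(DELIM)
    return first_name, city

packed = lookup_return("Asha", "Pune")
print(packed)
print(unpack(packed))
```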

Union Transformation – The fewer the Union transformations, the better your mapping performance. Try to avoid Unions, and if you use them keep the number minimal by doing the union of the sources in the SQ itself, as the Union, being an active transformation, consumes a lot of memory and processing time.

I will keep updating this post with more points on best practices in Informatica that I come across. Please do share if you have any that can help people around here. Learning and sharing is a joy 🙂

This was an unexpected bug found in our code dealing with a mapping variable, and I was unable to figure out why the variable value was not being picked up as expected.

The issue arose when I was trying to pass a value from a port to the variable and use it in the Workflow. When I checked at the session level, the variable’s value was different, whereas the same port value flowing to the target looked correct.

With some repeated runs and tests, I was able to catch what had really gone wrong. I had defined a mapping variable, and my only options for the Aggregation setting were ‘MAX’ or ‘MIN’, so I went on to select ‘MAX’.

Then comes assigning the port value to the variable with SETVARIABLE(). In doing so I assumed that the value on every run would simply be assigned to the variable. I was completely wrong with this assumption.

As stated in the Informatica Help content,

“At the end of a successful session, the PowerCenter Integration Service compares the final current value of the variable to the start value of the variable. Based on the aggregate type of the variable, it saves a final current value to the repository. Unless overridden, it uses the saved value as the initial value of the variable for the next session run.”

Breaking this down for easy understanding – the variable $$Var is first assigned the default value. Then, on a successful run, we try setting a new value: say the default was $$Var=10 and the new value is ‘15’ with aggregation type ‘MAX’; the service compares the new value with the old, finds the new one greater, and so $$Var=15 is saved.

And now $$Var will not change or be overwritten until I get a value >15. That was my issue: the new values were not being assigned as expected with SETVARIABLE().

Thus my solution was to set a minimum value before the session starts, which is as simple as overriding the value through the parameter file (.prm file), so I set it as ‘$$Var=0’. Now my new value is always the greater one, and the variable is assigned the latest value 🙂

Hope this makes things clear. Please put down your questions and comments, if any. Thanks!!
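A tiny Python simulation of this behaviour, based only on the rule quoted from the Help content. The function names are mine, `override` mimics the start value coming from the parameter file, and the port values are assumed to be non-negative so that 0 works as a floor.

```python
def run_session(start_value, new_value, aggregation="MAX"):
    """Value saved to the repository after a successful run, per the quoted rule."""
    pick = max if aggregation == "MAX" else min
    return pick(start_value, new_value)

def run_sequence(port_values, initial, override=None):
    """Run sessions back to back; `override` mimics a parameter-file start value."""
    saved, history = initial, []
    for value in port_values:
        start = saved if override is None else override
        saved = run_session(start, value)
        history.append(saved)
    return history

without_override = run_sequence([15, 12, 8], initial=10)
with_override = run_sequence([15, 12, 8], initial=10, override=0)
print(without_override)
print(with_override)
```

Without the override the variable gets stuck at 15; with the start value forced to 0 on each run, it follows the latest port value.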

Informatica PowerCenter: Working and Architecture

February 27, 2014

Let’s shed some light on the basic architecture of PowerCenter, which is ABCD learning for any Informatica developer. It’s good to know how and where our Infa objects get created and how they run in the back end.

As we may be well aware, Informatica has a multi-tier architecture: a client-server model with different services running together behind what we see in the PowerCenter client as objects being created, deleted and run. As developers, we must be well aware of the Repository database and its purpose, which is to save all the metadata on the objects in Informatica.

Here we have two major services, the Repository Service and the Integration Service. The Repository Service handles communication between the PowerCenter components, responding to the PC Client and reaching the Repository database over a native driver connection for the metadata.

The PowerCenter Client uses a native protocol to communicate with the PowerCenter Repository Service and PowerCenter Integration Service. The PowerCenter Client uses ODBC to connect to source and target databases.

How it works:

When we use the PC Client and create a Domain connection with a gateway from the client tool, the Service Manager on the server connects us to the PowerCenter Repository Service over an established TCP/IP connection. The Repository database, which is defined during installation of Informatica, is connected to the Repository Service through a native driver connection.

Every time a job/task runs, it is started from the Client connecting to the Integration Service, which in turn interacts with the Repository Service to get the repository objects from the Repository database. It uses TCP/IP to connect to the associated PowerCenter Repository Service and retrieve metadata.

The ODBC Connection in the Client/Integration services:

Whenever we create a source/target on our client machine, independent ODBC connections are created from the client machine. The Integration Service works in a similar way, connecting to the source through an ODBC/native connection that has already been established and defined.

The Flow:

The flow runs as follows: when we connect from a client (e.g. Workflow Manager), a connection is made to the Repository Service, which retrieves metadata from the Repository database as per the request from the client. Then, as we run a workflow, the Client connects to the Integration Service, which receives the command and connects to the source/target through an ODBC connection to fetch the data or write the output. The IS interacts with the Repository Service for the Infa object metadata, and the process runs in the Integration Service as defined in the mapping.

Hope this gives a bit of clarity on the backstage working of Informatica. Do let me know your suggestions, corrections and queries on this article.

Informatica – Post/Pre Session Variable Assignment

February 25, 2014

Hello friends, time for some learning. This time I have taken up Informatica variables and parameters, which I am very fond of 🙂

It is something I came across in one of the developments my team was involved in: there was a need to pass a value from one task/session to another, as a check later in the process depended on the value we got from an earlier session.

For instance, my requirement is to count the records from session_1 and then count the records in session_2 with the same counter (incrementing from where the session_1 count ended), ending up with the total count of records. Say I had 100 records in session_1; then for session_2 I have to continue counting from there as 100+1 and so on. Looks simple, but it is quite a nail-biting and challenging experience when we try to get it done in Informatica!!

Then we came across this feature available in Informatica, ‘Pre-Session/Post-Session Variable Assignment’, which is all about assigning variable values between sessions and their parent Worklet/Workflow and vice versa. Interesting, right? If it is not clear, let’s go through a step-by-step illustration for a better understanding.

1. All we need to do is define a counter variable/parameter $$v1_Count for session_1; our session then runs and populates $$v1_Count as a usual mapping variable/parameter does.

2. Next, assign this value to the common variable (which acts as an intermediary between our two sessions). This is the parent Worklet/Workflow variable, defined like any other variable in the Edit Workflow/Worklet options. In my case I have the Workflow variable $$wf_v_Count defined.

3. Now in session_1, under the Components tab, let’s edit the “Post session Variable assignment” option (it can be on failure or on success),

(i) Post-session on success variable assignment

(ii) Post-session on failure variable assignment

and I define it as – “Parent_Workflow/Worklet_Variable = Mapping_Variable/Parameter”

i.e., $$wf_v_Count = $$v1_Count

So here we have the $$v1_Count value available for any task, session or Worklet under the same parent to pick up and use for processing.

4. In our case, for the next session_2, I go to the Components tab, open “Pre Session Variable Assignment” and define it to assign the value from $$wf_v_Count to that session’s mapping variable/parameter $$v2_Count.

So we define it as “Mapping_Variable/Parameter = Parent_Workflow/Worklet_Variable”

i.e., $$v2_Count = $$wf_v_Count

The same variable can be used all over the parent Workflow/Worklet, in any Decision tasks or link conditions. Thus we have now passed the value from one session to the other, where it can be processed thereafter. Hope this was an interesting and helpful piece for you.

Do post your comments with any corrections or queries on this. Share this if it can help someone.
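The hand-off in steps 1 to 4 can be pictured with a few lines of Python. This is only a mental model, not Informatica code: the dictionary stands in for the workflow variables, the two functions for the sessions, and the record counts (100 and 40) are invented.

```python
def session_1(records):
    """Counts its records; the result stands in for $$v1_Count."""
    return len(records)

def session_2(records, start_count):
    """Continues counting from start_count, which stands in for $$v2_Count."""
    count = start_count
    for _ in records:
        count += 1
    return count

workflow_vars = {}  # stands in for the parent workflow's variables

v1_count = session_1(["row"] * 100)
workflow_vars["$$wf_v_Count"] = v1_count   # post-session assignment: $$wf_v_Count = $$v1_Count
v2_count = workflow_vars["$$wf_v_Count"]   # pre-session assignment:  $$v2_Count = $$wf_v_Count
total = session_2(["row"] * 40, v2_count)
print(total)
```

The second session starts at 100 and ends at the combined count, with the workflow variable being the only thing the two sessions share.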